References:

https://github.com/lm-sys/FastChat

https://blog.csdn.net/qq128252/article/details/132759107

## Installation

pip3 install "fschat[model_worker,webui]"
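To confirm that the package was installed, the installed version can be queried from Python. This is a minimal sketch, assuming the distribution name is `fschat` as in the pip command above; `installed_version` is a hypothetical helper name, not part of FastChat.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "fschat" is the distribution name used in the pip command above.
    print("fschat version:", installed_version("fschat"))
```

A result of `None` suggests the install ran against a different Python environment than the one being used here.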

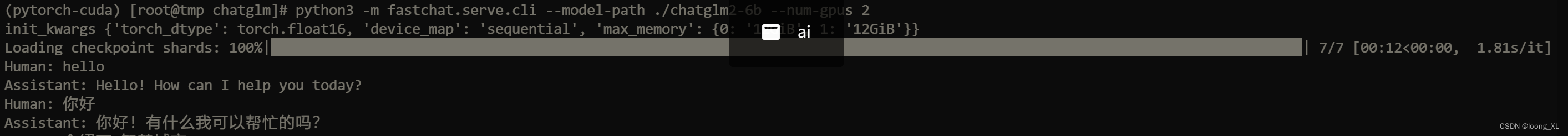

1. Testing chatglm2-6b

python3 -m fastchat.serve.cli --model-path ./chatglm2-6b --num-gpus 2

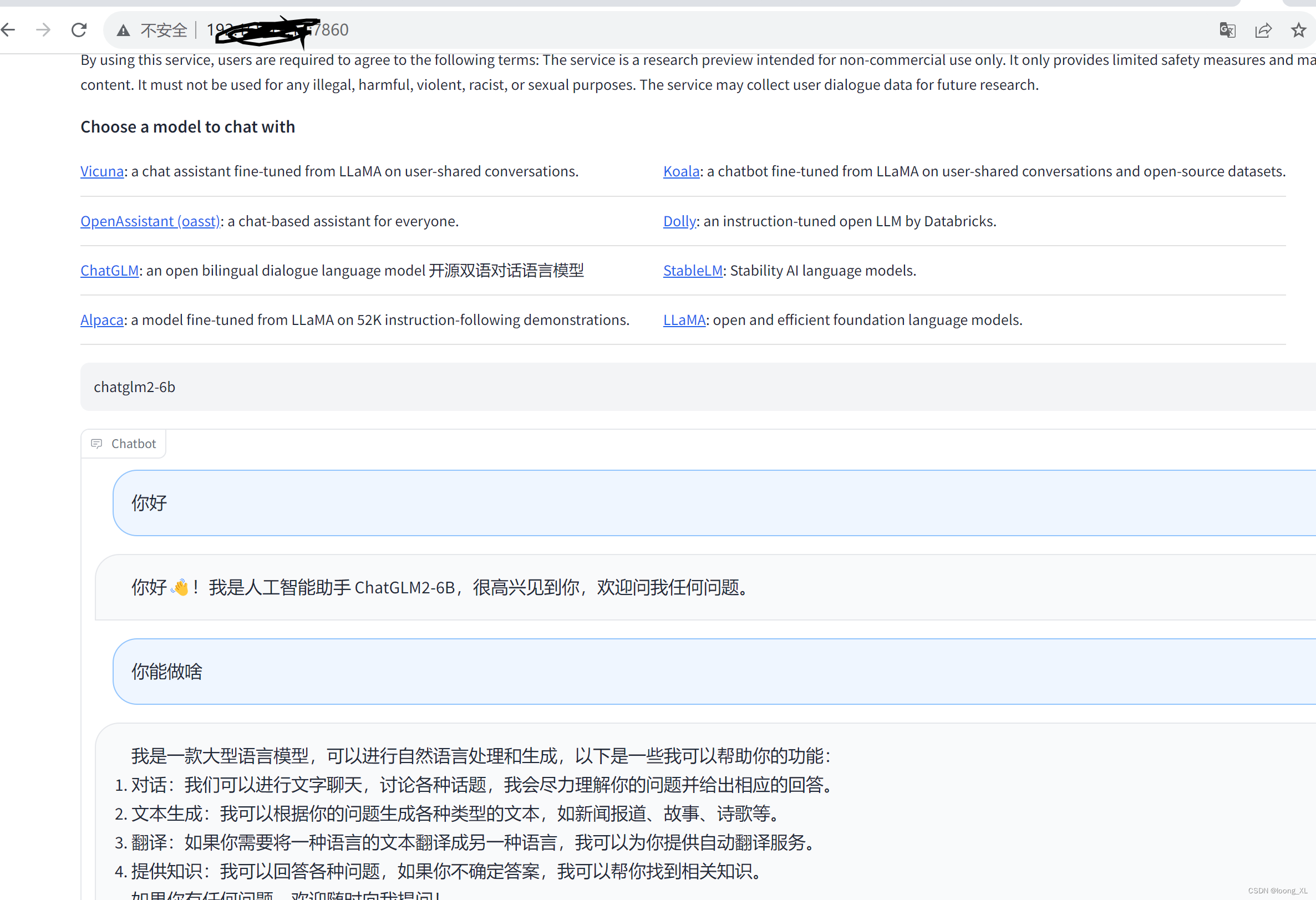

Web UI usage

1) Start the controller

python3 -m fastchat.serve.controller

2) Start the model worker

python3 -m fastchat.serve.model_worker --model-path ./chatglm2-6b --num-gpus 2 --host=0.0.0.0 --port=21002

3) Start the web server

python3 -m fastchat.serve.gradio_web_server

Open the URL printed by the web server in a browser to view the page:

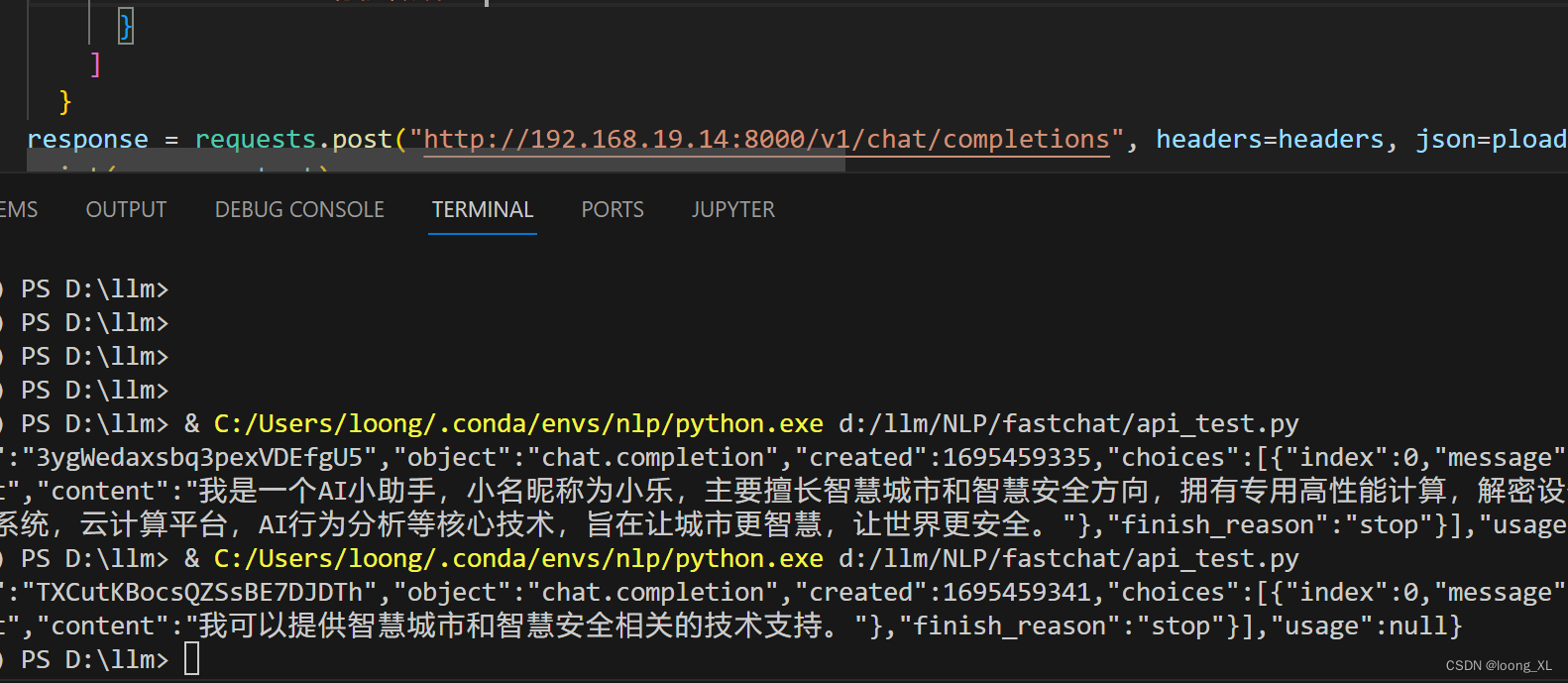
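The three steps above have to be started in order, and each process should be up before the next one begins. The sketch below shows one way to script that from Python. Treat it as an illustration under stated assumptions: `build_commands` and `wait_for_port` are hypothetical helpers, the controller port 21001 is an assumed default to verify against your setup, and `sys.executable` stands in for `python3`.

```python
import socket
import sys
import time
from typing import List


def build_commands(model_path: str, num_gpus: int) -> List[List[str]]:
    """Return the three commands from the steps above, in launch order."""
    py = sys.executable
    return [
        [py, "-m", "fastchat.serve.controller"],
        [py, "-m", "fastchat.serve.model_worker",
         "--model-path", model_path, "--num-gpus", str(num_gpus),
         "--host", "0.0.0.0", "--port", "21002"],
        [py, "-m", "fastchat.serve.gradio_web_server"],
    ]


def wait_for_port(host: str, port: int, timeout: float = 60.0) -> bool:
    """Poll until a TCP port accepts connections; return False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.5)
    return False


if __name__ == "__main__":
    import subprocess

    controller, worker, web = build_commands("./chatglm2-6b", 2)
    procs = [subprocess.Popen(controller)]
    # Assumption: the controller listens on port 21001; adjust if yours differs.
    wait_for_port("127.0.0.1", 21001)
    procs.append(subprocess.Popen(worker))
    # 21002 is the worker port from the command above.
    wait_for_port("127.0.0.1", 21002)
    procs.append(subprocess.Popen(web))
    for p in procs:
        p.wait()
```

Note that an open worker port only means the process is listening; loading a large model can take longer, so the web page may show no models for a short while.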

API service

1) Start the controller

python3 -m fastchat.serve.controller

2) Start the model worker

python3 -m fastchat.serve.model_worker --model-path ./chatglm2-6b --num-gpus 2 --host=0.0.0.0 --port=21002

3) Start the API server

python3 -m fastchat.serve.openai_api_server --host 0.0.0.0 --port 8000

4) Access from a client

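import requests

headers = {"Content-Type": "application/json"}

# Chat history in the OpenAI-style "messages" format.
pload = {
    "model": "chatglm2-6b",
    "messages": [
        # System prompt: "AI expert".
        {"role": "system", "content": "AI专家"},
        # Persona prompt (partly elided in the source): an AI assistant nicknamed 小乐.
        {"role": "user", "content": "你AI小助手,小名昵称为小乐,你主要擅长是智慧城***过30个字"},
        # Assistant turn: "OK, 小乐 is happy to help you."
        {"role": "assistant", "content": "好的,小乐很乐意为你服务"},
        # The actual question: "What can you do?"
        {"role": "user", "content": "你能做啥?"},
    ],
}

# The host part of the URL is masked in the source; substitute your server address.
response = requests.post("http://192****:8000/v1/chat/completions", headers=headers, json=pload, stream=True)
print(response.text)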

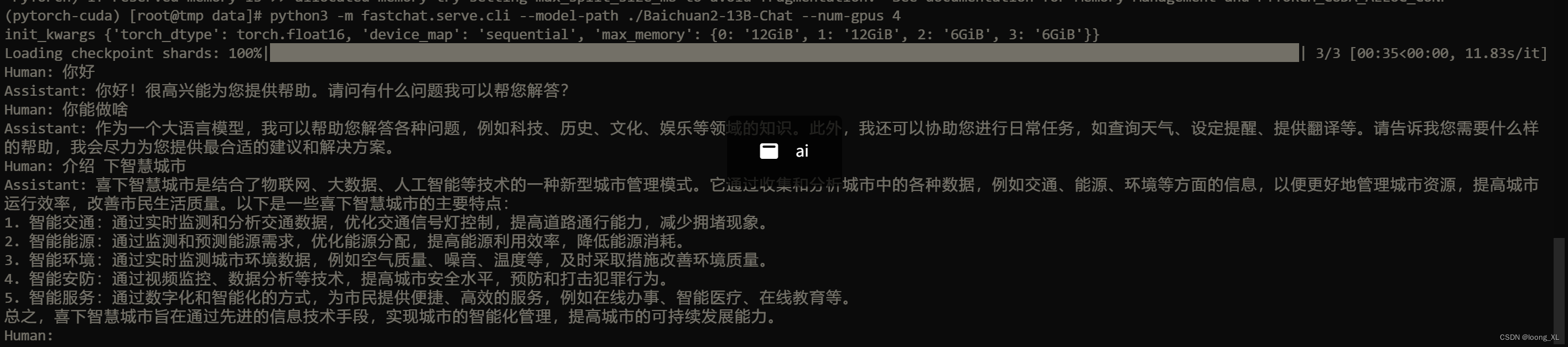
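The client prints the raw response body. Since the endpoint path follows the OpenAI chat completions convention, the reply text can usually be pulled out of the JSON. The sketch below assumes the body has the OpenAI-style shape with `choices[0].message.content`; `extract_reply` is a hypothetical helper, and the sample dictionary is hand-written, not real server output.

```python
from typing import Any, Dict


def extract_reply(body: Dict[str, Any]) -> str:
    """Return the assistant text from an OpenAI-style chat completion body."""
    choices = body.get("choices") or []
    if not choices:
        # Error payloads typically carry no "choices"; surface the raw body.
        raise ValueError(f"no choices in response: {body!r}")
    return choices[0]["message"]["content"]


if __name__ == "__main__":
    # Hand-written sample in the assumed shape, not real server output.
    sample = {
        "choices": [
            {"index": 0, "message": {"role": "assistant", "content": "hello"}}
        ]
    }
    print(extract_reply(sample))
```

With the client code, this would be called as `extract_reply(response.json())` in place of printing `response.text`.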

2. Testing Baichuan2-13B-Chat

## Run command:

python3 -m fastchat.serve.cli --model-path ./Baichuan2-13B-Chat --num-gpus 4

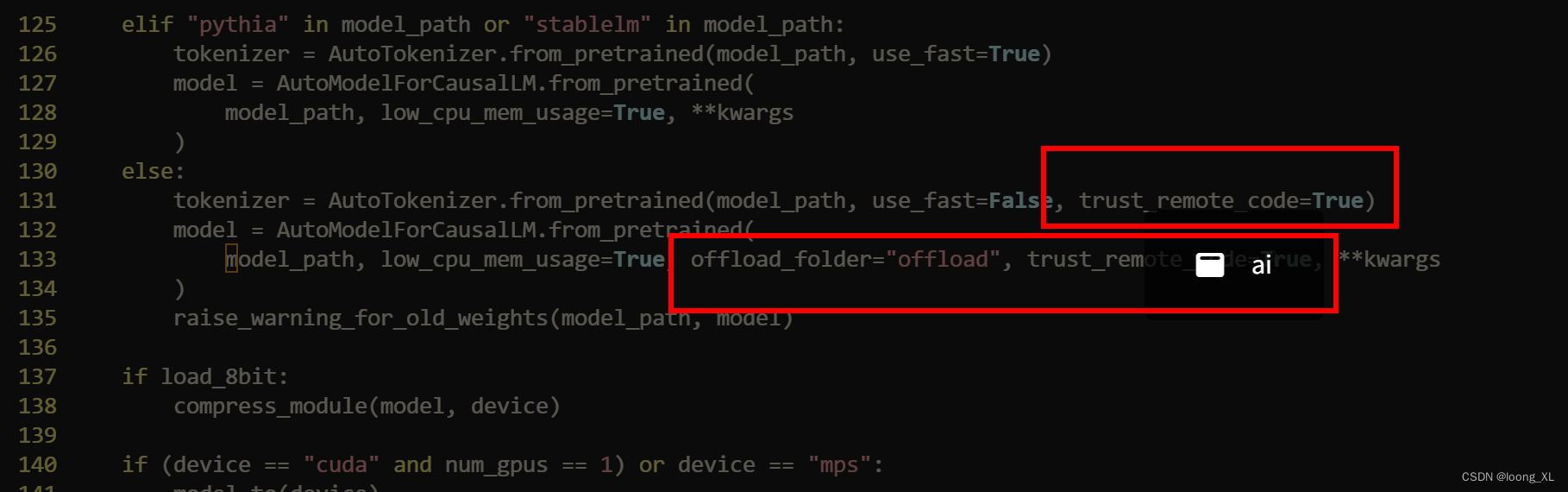

Running this produced two errors:

1) ValueError: Tokenizer class BaichuanTokenizer does not exist or is not currently imported.

2) An offload error: ValueError: The current device_map had weights offloaded to the disk. Please provide an offload_folder for them. An offload_folder therefore also needs to be added.

Following the error messages, edit this file:

/site-packages/fastchat/serve/inference.py

Add trust_remote_code=True
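To show what the two fixes mean at the Hugging Face `transformers` level, here is a standalone sketch. It is not the code in FastChat's inference.py. It assumes `transformers` (and `accelerate`, needed for `device_map="auto"`) are installed; `baichuan_load_kwargs`, `load_baichuan`, and the `./offload` directory are hypothetical names chosen for illustration.

```python
from typing import Any, Dict


def baichuan_load_kwargs(offload_folder: str = "./offload") -> Dict[str, Any]:
    """Keyword arguments addressing the two errors reported above."""
    return {
        # Allows loading the custom tokenizer/model code shipped with the model.
        "trust_remote_code": True,
        # Directory for weights that device_map offloads to disk.
        "offload_folder": offload_folder,
    }


def load_baichuan(model_path: str):
    """Load tokenizer and model with transformers (imported lazily)."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    kwargs = baichuan_load_kwargs()
    # The tokenizer only needs trust_remote_code; offloading applies to weights.
    tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_path, device_map="auto", **kwargs
    )
    return tokenizer, model
```

`trust_remote_code=True` executes Python code from the model directory, so it should only be used with model files from a trusted source.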
