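K8S + Jenkins + Harbor + Docker + GitLab Server Cluster Deployment

Download links for the required resources

This article is dedicated to the girl I love most.

Contents

- K8S + Jenkins + Harbor + Docker + GitLab Server Cluster Deployment
- 1. Prepare the following servers
- 2. Steps to run on all servers
- 2.1 Disable the firewall
- 2.2 Disable SELinux
- 2.3 Disable swap (by default the kubelet refuses to start with swap enabled)
- 2.4 Update yum packages (optional)
- 2.5 Synchronize time
- 2.6 Install wget and vim
- 2.7 Add the Docker yum repository
- 2.8 List available Docker versions
- 2.9 Install Docker
- 2.10 Create the /etc/docker directory
- 2.11 Configure registry mirrors (mainland China)
- 2.12 Enable Docker at boot
- 2.13 Start Docker
- 2.14 Install lrzsz (the rz upload tool)
- 3. Configure the k8s servers
- 3.1 Add the Aliyun yum repository for k8s
- 3.2 Install kubeadm, kubelet and kubectl
- 3.3 Enable kubelet at boot
- 3.4 Verify the installation
- 3.5 Map the three hostnames in /etc/hosts (adjust the IPs to your environment)
- 3.6 Set the bridge parameters
- 3.7 Initialize the k8s-master node
- 3.8 Deploy the network plugin for communication between nodes
- 3.9 The k8s cluster is now initialized; take a break
- 3.10 Install Kuboard, a graphical management tool
- 4. Configure the Jenkins server
- 4.1 Let Jenkins use the host's Docker
- 4.2 Create the Jenkins mount directory
- 4.3 Write docker-compose.yml
- 4.4 Start Compose once
- 4.5 Configure a faster update mirror
- 4.6 Get the initial Jenkins password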
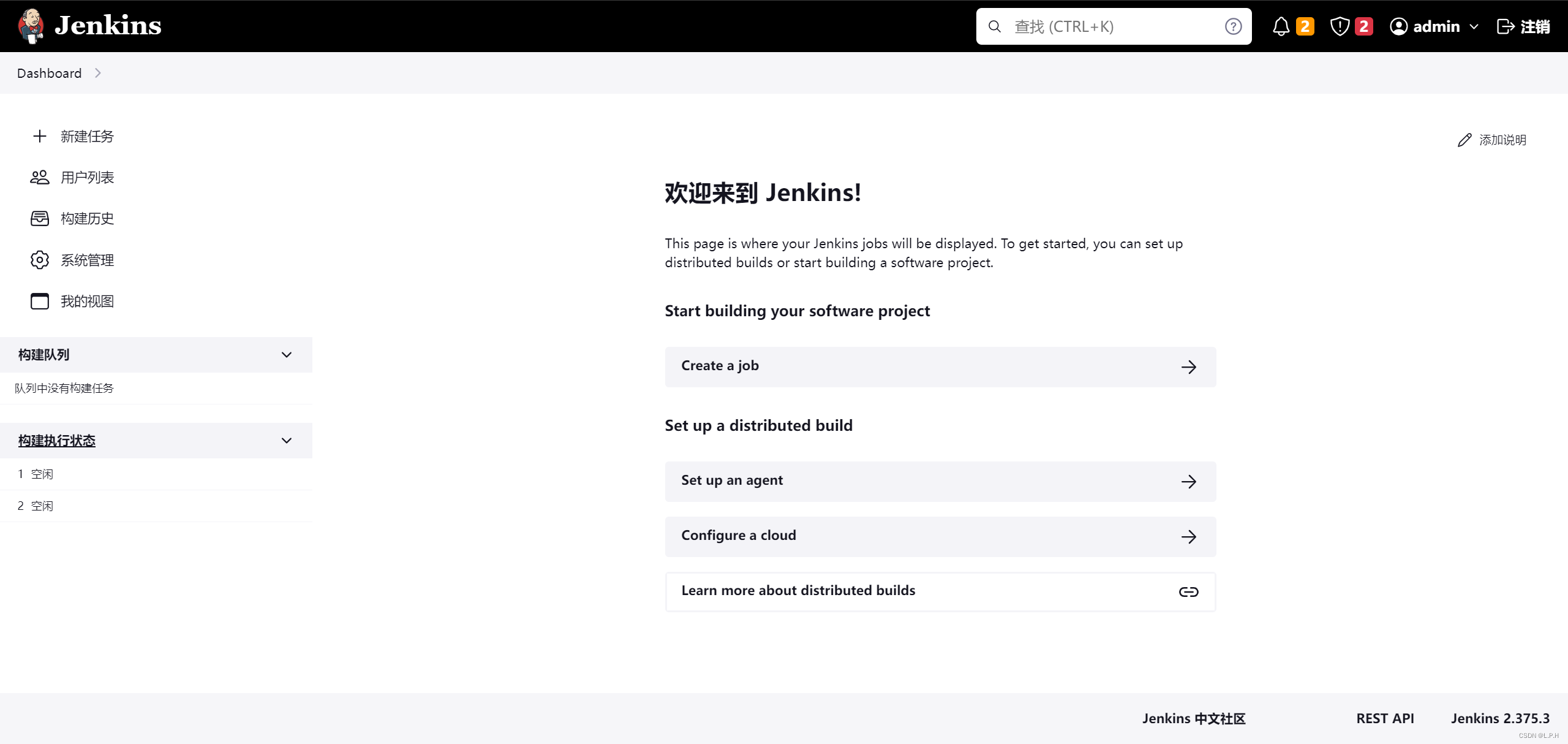

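- 4.7 Log in to Jenkins
- 4.8 Upgrade Jenkins
- 4.9 Log in again to confirm the upgraded version
- 4.10 Install the following Jenkins plugins
- 4.11 Jenkins is done for now
- 5. Configure the GitLab server
- 5.1 Pull the image
- 5.2 Create the shared volume directories
- 5.3 Write docker-compose.yml
- 5.4 Start with docker compose
- 5.5 Alternative: start with a plain docker command
- 5.6 Open the web interface
- 5.7 Switch the interface to Chinese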
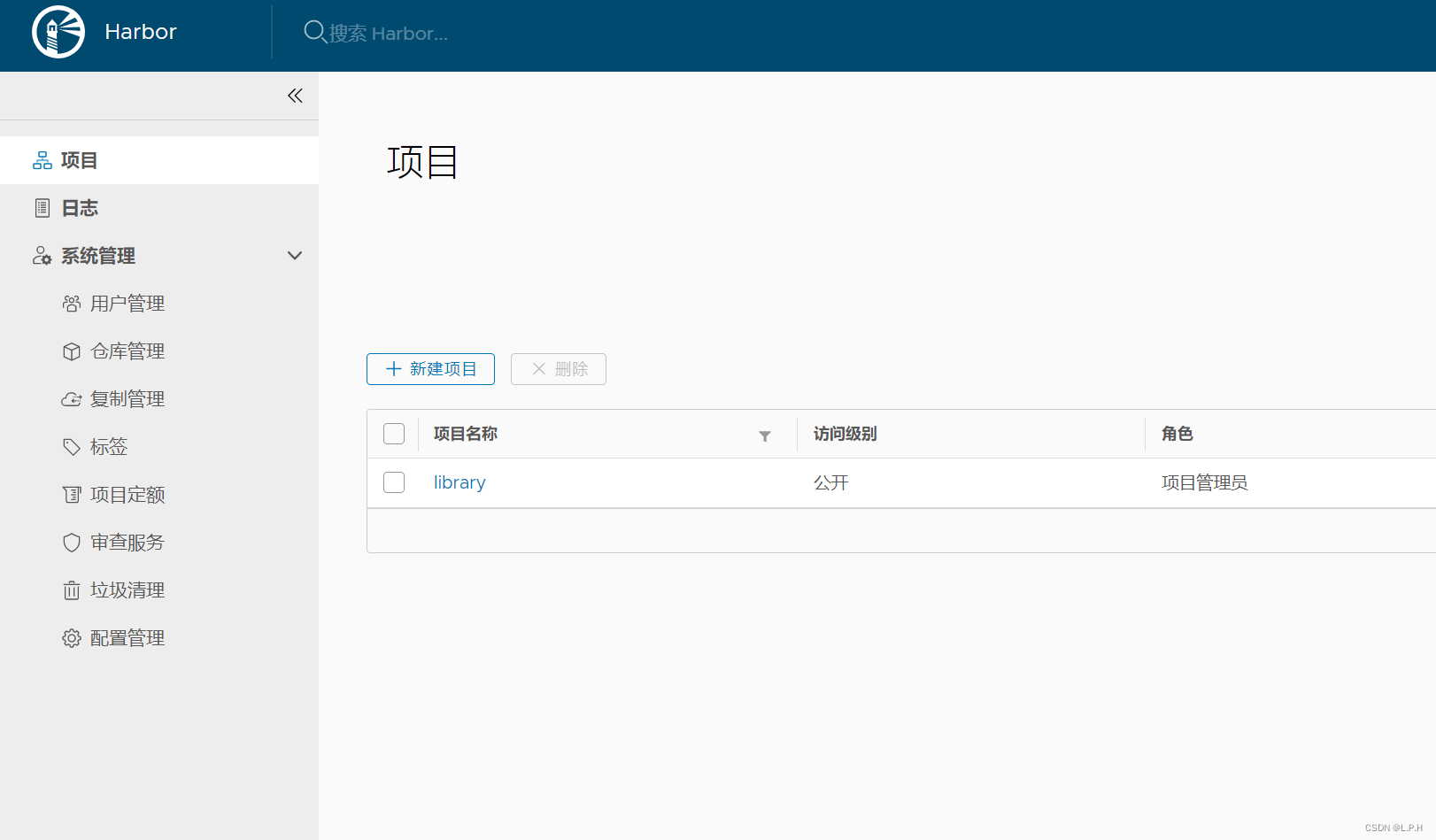

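- 6. Configure the Harbor image registry
- 6.1 Install docker and docker-compose
- 6.2 Download the Harbor installer
- 6.3 Extract the installer
- 6.4 Enter the extracted harbor directory
- 6.5 Load the images into Docker
- 6.6 Edit the harbor.yml configuration file
- 6.7 Run **./prepare && ./install.sh**
- 6.8 Check the running containers
- 6.9 Test access
- 7. Connect the GitLab server to Harbor
- 7.1 Edit daemon.json on the GitLab server
- 7.2 Reload the configuration
- 7.3 Restart Docker
- 7.4 Test the connection
- 7.5 Connection succeeded
- 8. Continuous integration between Jenkins and GitLab
- 8.1 Create a key pair in Jenkins
- 8.2 View and copy the public and private keys
- 8.3 Add the public key to GitLab
- 8.4 Add a global credential (the private key) to Jenkins
- 8.5 Test pulling code
- 8.6 Adjust Jenkins security settings
- 8.7 Code pulling is complete
- 9. Install Maven and the JDK for Jenkins
- 9.1 Upload the two archives to the server
- 9.2 Extract Maven and the JDK and rename them
- 9.3 Edit Maven's settings.xml
- 9.4 Move the JDK and Maven into the Jenkins mount directory
- 9.5 Enter the Jenkins container
- 9.6 Configure the JDK and Maven in the Jenkins global configuration
- * 9.7 Create a directory for JAR files on the Jenkins server (seems to be of little use)
- * 9.8 Edit Publish over SSH in the system configuration
- 9.9 Test a Maven build
- 10. Create a pipeline and connect to K8s for CI/CD
- 10.0 Configure the k8s SSH Servers in Jenkins
- 10.1 Create a project, choose Pipeline, and write the pipeline script below
- 10.2 Outline of a pipeline script (for reference)
- 10.3 Pipeline syntax: pull code from the Git repository
- 10.4 Pipeline syntax: build the project with Maven
- 10.5 Pipeline syntax: build a custom image with Docker
- 10.5 Pipeline syntax: push the custom image to Harbor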
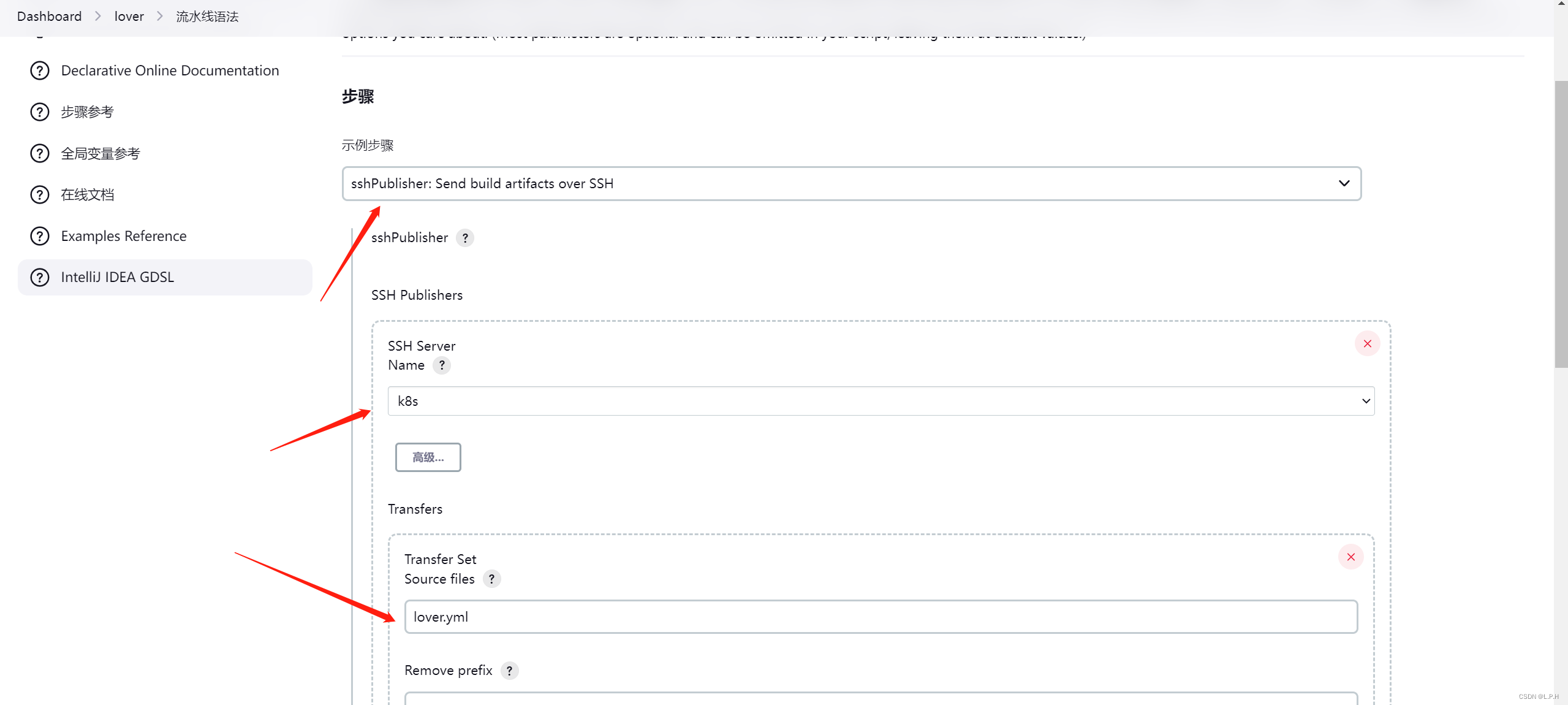

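- 10.6 Pipeline syntax: send the yml file to the master node
- 10.7 Pipeline syntax: run kubectl on k8s-master remotely
- 10.8 Final pipeline script
- 10.9 Run the pipeline to complete the K8s deployment
- Errors
- 1. docker service ls fails
- 2. Cannot pull images from Harbor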

1. Prepare the following servers

OS: CentOS 7 (CentOS-7-x86_64-Minimal-2009.iso)

All servers use the account root with password 123456.

The virtual machines run on VMware 17.

| Service / node | IP address | Spec |
|---|---|---|
| k8s-master | 192.168.85.141 | 2 CPUs / 2 cores, 4 GB RAM |
| k8s-node1 | 192.168.85.142 | 2 CPUs / 2 cores, 4 GB RAM |
| k8s-node2 | 192.168.85.143 | 2 CPUs / 2 cores, 4 GB RAM |
| Jenkins | 192.168.85.144 | 2 CPUs / 2 cores, 8 GB RAM |
| Gitlab | 192.168.85.145 | 2 CPUs / 2 cores, 8 GB RAM |
| Harbor | 192.168.85.146 | 2 CPUs / 2 cores, 8 GB RAM |

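2. Steps to run on all servers

2.1 Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

2.2 Disable SELinux

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config # permanent, applies after a reboot

setenforce 0 # temporary, until the next reboot

2.3 Disable swap (by default the kubelet refuses to start with swap enabled)

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent

swapoff -a # temporary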
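Before editing the real /etc/fstab, the sed expression above can be tried on a scratch copy. The following is a minimal sketch, assuming a sed that supports -E (GNU or BSD); the sample fstab lines and the scratch file are hypothetical and only illustrate what the expression does.

```shell
set -eu

# Hypothetical scratch file standing in for /etc/fstab.
scratch_fstab=$(mktemp)
cat > "$scratch_fstab" <<'EOF'
/dev/mapper/centos-root /    xfs  defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF

# Same expression as above: prefix every line that mentions swap with '#'.
# Without -i the scratch file itself is left untouched.
commented=$(sed -E 's/.*swap.*/#&/' "$scratch_fstab")
printf '%s\n' "$commented"
```

Only the swap line should come back commented out; the root filesystem line must stay unchanged.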

2.4 Update yum packages (optional)

yum -y update

2.5 Synchronize time

yum install ntpdate -y # install the tool if it is missing

ntpdate time.windows.com

2.6 Install wget and vim

yum install wget -y

yum install vim -y

2.7 Add the Docker yum repository

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

2.8 List available Docker versions

yum list docker-ce --showduplicates|sort -r

2.9 Install Docker

yum install docker-ce-20.10.9 -y

2.10 Create the /etc/docker directory

mkdir -p /etc/docker

2.11 Configure registry mirrors (mainland China)

sudo vim /etc/docker/daemon.json

{
  "registry-mirrors": ["https://q5bf287q.mirror.aliyuncs.com", "https://registry.docker-cn.com", "http://hub-mirror.c.163.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
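When the same file has to be created on six servers, writing it non-interactively can be more convenient than editing it in vim. A minimal sketch, assuming bash; DOCKER_ETC is a hypothetical variable introduced here so that the script writes to a scratch directory unless it is pointed at /etc/docker on purpose.

```shell
set -eu

# DOCKER_ETC is a hypothetical override; it defaults to a scratch directory
# so the sketch is safe to run. Use DOCKER_ETC=/etc/docker for the real file.
docker_etc="${DOCKER_ETC:-$(mktemp -d)}"
mkdir -p "$docker_etc"

# Same content as the daemon.json shown above.
cat > "$docker_etc/daemon.json" <<'EOF'
{
  "registry-mirrors": ["https://q5bf287q.mirror.aliyuncs.com", "https://registry.docker-cn.com", "http://hub-mirror.c.163.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

cat "$docker_etc/daemon.json"
```

The systemd cgroup driver setting matters for the k8s nodes: kubeadm configures the kubelet for systemd by default, and Docker has to match it.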

2.12 Enable Docker at boot

systemctl enable docker.service

2.13 Start Docker

sudo systemctl daemon-reload

service docker start

2.14 Install lrzsz (the rz upload tool)

yum install lrzsz -y

3. Configure the k8s servers

3.1 Add the Aliyun yum repository for k8s

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

3.2 Install kubeadm, kubelet and kubectl

yum install kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6 -y

3.3 Enable kubelet at boot

systemctl enable kubelet.service

3.4 Verify the installation

yum list installed | grep kubelet

yum list installed | grep kubeadm

yum list installed | grep kubectl

3.5 Map the three hostnames in /etc/hosts (adjust the IPs to your environment)

Also set each machine's own hostname, for example hostnamectl set-hostname k8s-master on the master; otherwise the node registers as localhost.localdomain.

cat >> /etc/hosts << EOF
192.168.85.141 k8s-master
192.168.85.142 k8s-node1
192.168.85.143 k8s-node2
EOF
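The cat >> form appends the three lines again every time it is run. A small helper that only appends missing names avoids duplicate entries. This is a sketch, assuming bash; add_host_entry is a hypothetical helper name, and the script works on a scratch copy rather than the real /etc/hosts.

```shell
set -eu

# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears as a hostname in it.
add_host_entry() {
  local hosts_file=$1 ip=$2 name=$3
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Scratch copy standing in for /etc/hosts.
hosts_copy=$(mktemp)
printf '127.0.0.1 localhost\n' > "$hosts_copy"

# Running the block twice must not duplicate any entry.
for run in 1 2; do
  add_host_entry "$hosts_copy" 192.168.85.141 k8s-master
  add_host_entry "$hosts_copy" 192.168.85.142 k8s-node1
  add_host_entry "$hosts_copy" 192.168.85.143 k8s-node2
done

cat "$hosts_copy"
```

To apply it for real, pass /etc/hosts as the first argument on each of the three k8s machines.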

3.6 Set the bridge parameters

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl --system # apply the settings

3.7 Initialize the k8s-master node

kubeadm init --apiserver-advertise-address=192.168.85.141 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.23.6 --service-cidr=10.96.0.0/16 --pod-network-cidr=10.244.0.0/16

Output:

[root@localhost etc]# kubeadm init --apiserver-advertise-address=192.168.85.141 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.23.6 --service-cidr=10.96.0.0/16 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.23.6

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local localhost.localdomain] and IPs [10.96.0.1 192.168.85.141]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost localhost.localdomain] and IPs [192.168.85.141 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost localhost.localdomain] and IPs [192.168.85.141 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 10.519327 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.23" in namespace kube-system with the configuration for the kubelets in the cluster

NOTE: The "kubelet-config-1.23" naming of the kubelet ConfigMap is deprecated. Once the UnversionedKubeletConfigMap feature gate graduates to Beta the default name will become just "kubelet-config". Kubeadm upgrade will handle this transition transparently.

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node localhost.localdomain as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node localhost.localdomain as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 2pw233.jhsl6y1ysijtafc7

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.85.141:6443 --token 2pw233.jhsl6y1ysijtafc7 \

--discovery-token-ca-cert-hash sha256:230fdb6f83dd9e81ff421e0be7d681de6df630501395c51e6376c76ca2df81ee

As the output instructs, run the following on the master node:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check that the node has registered:

[root@localhost etc]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 107s v1.23.6

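Note: if the node is listed as localhost.localdomain instead of k8s-master, set the hostname (hostnamectl set-hostname k8s-master), run kubeadm reset, and repeat the initialization.

Run the join command on each worker node. Use the token and hash printed by your own kubeadm init; the values below are the ones from the output above.

kubeadm join 192.168.85.141:6443 --token 2pw233.jhsl6y1ysijtafc7 \

--discovery-token-ca-cert-hash sha256:230fdb6f83dd9e81ff421e0be7d681de6df630501395c51e6376c76ca2df81ee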

A successful join prints:

[root@localhost etc]# kubeadm join 192.168.85.141:6443 --token 2pw233.jhsl6y1ysijtafc7 \

> --discovery-token-ca-cert-hash sha256:230fdb6f83dd9e81ff421e0be7d681de6df630501395c51e6376c76ca2df81ee

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Then run on the master node:

[root@localhost etc]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
k8s-master Ready control-plane,master 6m22s v1.23.6
k8s-node1 Ready <none> 22s v1.23.6
k8s-node2 Ready <none> 18s v1.23.6

If a worker node is not listed, run kubeadm reset on that worker node and then repeat the kubeadm join step.
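The join command has to be the one printed by your own kubeadm init. If that output was saved to a file, the command can be pulled back out of it. The sketch below assumes the output was captured to a log (the file here is a hypothetical scratch file filled with the lines shown earlier); if nothing was saved, or the token has expired, kubeadm token create --print-join-command on the master prints a fresh command.

```shell
set -eu

# Hypothetical scratch file holding the tail of the kubeadm init output.
init_log=$(mktemp)
cat > "$init_log" <<'EOF'
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.85.141:6443 --token 2pw233.jhsl6y1ysijtafc7 \
    --discovery-token-ca-cert-hash sha256:230fdb6f83dd9e81ff421e0be7d681de6df630501395c51e6376c76ca2df81ee
EOF

# Take the "kubeadm join" line plus its continuation line, drop the trailing
# backslash, and squeeze everything onto a single line.
join_cmd=$(grep -A1 '^kubeadm join' "$init_log" \
  | sed -e 's/\\$//' \
  | tr -s '[:space:]' ' ' \
  | sed -e 's/ $//')

printf '%s\n' "$join_cmd"
```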

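If kubectl get nodes does not work on a worker node, run the following on that node:

mkdir -p ~/.kube

vim ~/.kube/config

# On the master node, print the file with: cat ~/.kube/config

# Paste that content into the config file just created on the worker node.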

3.8 Deploy the network plugin for communication between nodes

(Run on the master.)

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The download sometimes fails:

[root@k8s-master docker]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

--2023-01-05 16:28:08-- https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 0.0.0.0, ::

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|0.0.0.0|:443... failed: Connection refused.

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|::|:443... failed: Connection refused.

In that case, open the URL in a browser, copy the content, and save it as kube-flannel.yml:

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - "networking.k8s.io"
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.1.2
        #image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.2
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.20.2
        #image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.2
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.20.2
        #image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.2
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

Place the file on the master and apply it:

kubectl apply -f kube-flannel.yml

Output:

[root@localhost home]# ls

kube-flannel.yml

[root@localhost home]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@localhost home]#
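Once the network plugin is applied, every node should report Ready. Checking that by eye is easy with three nodes, but a small filter makes it scriptable. A minimal sketch, assuming bash and awk; count_not_ready is a hypothetical helper, and the sample text is an invented variation of the node listing shown earlier (k8s-node2 set to NotReady for illustration).

```shell
set -eu

# count_not_ready: reads `kubectl get nodes` output on stdin and prints the
# number of nodes whose STATUS column is not exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is counted as not ready too.
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Invented sample; on the real master use: kubectl get nodes | count_not_ready
sample_nodes='NAME STATUS ROLES AGE VERSION
k8s-master Ready control-plane,master 6m22s v1.23.6
k8s-node1 Ready <none> 22s v1.23.6
k8s-node2 NotReady <none> 18s v1.23.6'

not_ready=$(printf '%s\n' "$sample_nodes" | count_not_ready)
echo "nodes not ready: $not_ready"
```

If a node stays NotReady, kubectl get pods -n kube-flannel shows whether the flannel pod on that node has started.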

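3.9 The k8s cluster is now initialized; take a break

3.10 Install Kuboard, a graphical management tool

Run on the master node:

kubectl apply -f https://addons.kuboard.cn/kuboard/kuboard-v3.yaml

Check whether the installation has finished: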

kubectl get pods -n kuboard

[root@localhost etc]# kubectl get pods -n kuboard

NAME READY STATUS RESTARTS AGE

kuboard-etcd-lkxmn 0/1 ContainerCreating 0 30s

kuboard-v3-56b4b954c9-jl6qw 0/1 ContainerCreating 0 30s

[root@localhost etc]# kubectl get pods -n kuboard

NAME READY STATUS RESTARTS AGE

kuboard-etcd-lkxmn 0/1 ContainerCreating 0 31s

kuboard-v3-56b4b954c9-jl6qw 0/1 ContainerCreating 0 31s

Once all pods are Running, open http://192.168.85.141:30080 (Kuboard listens on port 30080 by default).

Username: admin, password: Kuboard123

4. Configure the Jenkins server

Jenkins is installed with Docker Compose, which came along with the Docker installation earlier.

4.1 Let Jenkins use the host's Docker

Go to /var/run and locate docker.sock:

[root@npy run]# ls

auditd.pid containerd cryptsetup dmeventd-client docker.pid initramfs lvm netreport sepermit sudo tmpfiles.d user

chrony crond.pid dbus dmeventd-server docker.sock lock lvmetad.pid NetworkManager setrans syslogd.pid tuned utmp

console cron.reboot dhclient-ens33.pid docker faillock log mount plymouth sshd.pid systemd udev xtables.lock

[root@npy run]# pwd

/var/run

Change the owner and group of docker.sock:

chown root:root docker.sock

Grant read/write permission to other users:

chmod o+rw docker.sock

4.2 Create the Jenkins mount directory

mkdir -p /home/jenkins/jenkins_mount

chmod 777 /home/jenkins/jenkins_mount

4.3 Write docker-compose.yml

The browser-facing port is 10240.

The container name is npy_jenkins.

version: '3.1'
services:
  jenkins:
    image: jenkins/jenkins
    privileged: true
    user: root
    ports:
      - 10240:8080
      - 10241:50000
    container_name: npy_jenkins
    volumes:
      - /home/jenkins/jenkins_mount:/var/jenkins_home
      - /etc/localtime:/etc/localtime
      - /var/run/docker.sock:/var/run/docker.sock
      - /usr/bin/docker:/usr/bin/docker
      - /etc/docker/daemon.json:/etc/docker/daemon.json

4.4 Start Compose once

docker compose up -d

4.5 Configure a faster update mirror

# Edit hudson.model.UpdateCenter.xml in the mount directory to use the Tsinghua mirror

[root@localhost jenkins_mount]# pwd

/home/jenkins/jenkins_mount

[root@localhost jenkins_mount]# ls

config.xml docker-compose.yml hudson.model.UpdateCenter.xml jenkins.telemetry.Correlator.xml nodeMonitors.xml plugins secret.key.not-so-secret updates users

copy_reference_file.log failed-boot-attempts.txt identity.key.enc jobs nodes secret.key secrets userContent war

[root@localhost jenkins_mount]#

<?xml version='1.1' encoding='UTF-8'?>
<sites>
  <site>
    <id>default</id>
    <url>https://mirrors.tuna.tsinghua.edu.cn/jenkins/updates/update-center.json</url>
  </site>
</sites>
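The same change can be made with sed instead of editing the file by hand. This is a sketch under assumptions: JENKINS_HOME_DIR is a hypothetical variable that defaults to a scratch directory, and the seeded file uses the stock updates.jenkins.io URL as the value being replaced.

```shell
set -eu

# JENKINS_HOME_DIR is a hypothetical override; for the real file it would be
# /home/jenkins/jenkins_mount. By default a scratch directory is used.
jenkins_home="${JENKINS_HOME_DIR:-$(mktemp -d)}"
uc_file="$jenkins_home/hudson.model.UpdateCenter.xml"

# Seed the scratch copy with a stock-looking file when none exists yet.
if [ ! -f "$uc_file" ]; then
  cat > "$uc_file" <<'EOF'
<?xml version='1.1' encoding='UTF-8'?>
<sites>
  <site>
    <id>default</id>
    <url>https://updates.jenkins.io/update-center.json</url>
  </site>
</sites>
EOF
fi

mirror_url='https://mirrors.tuna.tsinghua.edu.cn/jenkins/updates/update-center.json'

# Rewrite the <url> element in place, keeping a .bak copy of the original.
sed -i.bak "s#<url>.*</url>#<url>${mirror_url}</url>#" "$uc_file"

grep '<url>' "$uc_file"
```

Jenkins reads this file at startup, so restart the container afterwards for the new update site to take effect.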

4.6 Get the initial Jenkins password

cat /home/jenkins/jenkins_mount/secrets/initialAdminPassword

[root@localhost jenkins_mount]# cat /home/jenkins/jenkins_mount/secrets/initialAdminPassword

296fcfb8b0a04a4b8716e33b53fa6743

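4.7 Log in to Jenkins

Open http://192.168.85.144:10240 and choose to install the suggested plugins.

4.8 Upgrade Jenkins

First download the Jenkins WAR package.

Download link: version 2.375.3

Then stop the Jenkins container. Stop it, do not remove it!

# docker stop 677c9d9ab474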

[root@localhost jenkins_mount]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

677c9d9ab474 jenkins/jenkins "/sbin/tini -- /usr/…" 27 minutes ago Up 27 minutes 0.0.0.0:10240->8080/tcp, :::10240->8080/tcp, 0.0.0.0:10241->50000/tcp, :::10241->50000/tcp npy_jenkins

[root@localhost jenkins_mount]# docker stop 677c9d9ab474

f1c11a1c592b

[root@localhost jenkins_mou

Upload the downloaded jenkins.war to the server with rz, then copy it into the container with docker cp:

[root@localhost jenkins]# ls

jenkins_mount

[root@localhost jenkins]# rz

[root@localhost jenkins]# ls

jenkins_mount jenkins.war

[root@localhost jenkins]# docker cp jenkins.war 677c9d9ab474:/usr/share/jenkins/jenkins.war

Preparing to copy...

Copying to container - 0B

Copying to container - 0B

Copying to container - 512B

Copying to container - 32.77kB

Copying to container - 65.54kB

Copying to container - 98.3kB

Copying to container - 131.1kB

Then start the container again:

docker start 677c9d9ab474

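4.9 Log in again to confirm the upgraded version

Username: admin, password: 123456

4.10 Install the following Jenkins plugins

The first is used with the GitLab repository (it lets a build take a Git branch or tag as a parameter); the second connects to target servers over SSH.

Git Parameter

Publish Over SSH

4.11 Jenkins is done for now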

5. Configure the GitLab server

5.1 Pull the image

docker pull gitlab/gitlab-ce

5.2 Create the shared volume directories (under /home/gitlab)

[root@localhost gitlab]# mkdir etc

[root@localhost gitlab]# mkdir log

[root@localhost gitlab]# mkdir data

[root@localhost gitlab]# ls

data etc log

[root@localhost gitlab]# chmod 777 data/ etc/ log/

5.3 Write docker-compose.yml

version: '3.1'
services:
  gitlab:
    image: 'gitlab/gitlab-ce'
    restart: always
    container_name: npy_gitlab
    privileged: true
    environment:
      GITLAB_OMNIBUS_CONFIG: |
        external_url 'http://192.168.85.145'
    ports:
      - '80:80'
      - '443:443'
      - '33:22'
    volumes:
      - '/home/gitlab/etc:/etc/gitlab'
      - '/home/gitlab/log:/var/log/gitlab'
      - '/home/gitlab/data:/var/opt/gitlab'

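5.4 Start with docker compose

docker compose up -d

5.5 Alternative: start with a plain docker command

Use this instead of Compose, not in addition to it. Note that it maps different host ports for 443 and 22 than the Compose file above.

docker run -itd --name=npy_gitlab --restart=always --privileged=true -p 8443:443 -p 80:80 -p 222:22 -v /home/gitlab/etc:/etc/gitlab -v /home/gitlab/log:/var/log/gitlab -v /home/gitlab/data:/var/opt/gitlab gitlab/gitlab-ce

5.6 Open the web interface

http://192.168.85.145:80

The username is root.

To get the password, read it from inside the container (npy_gitlab is the container name set above):

sudo docker exec -it npy_gitlab grep 'Password:' /etc/gitlab/initial_root_password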
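The grep above prints the whole line, label included. To capture just the password value, for example to paste it into the login form, awk can pick out the second field. A minimal sketch: the sample file below is hypothetical, its password is made up, and its comment lines only approximate the real file.

```shell
set -eu

# Hypothetical stand-in for /etc/gitlab/initial_root_password.
pw_file=$(mktemp)
cat > "$pw_file" <<'EOF'
# WARNING: This value is valid only until the root password is changed.

Password: ExamplePassw0rd=

# NOTE: This file will be automatically deleted in the first reconfigure run after 24 hours.
EOF

# Print only the value that follows the "Password:" label.
root_password=$(awk '/^Password:/ { print $2 }' "$pw_file")
printf 'initial root password: %s\n' "$root_password"

# Against the real container:
#   docker exec npy_gitlab awk '/^Password:/ { print $2 }' /etc/gitlab/initial_root_password
```

Because GitLab removes this file after the first day, it is worth logging in and changing the root password soon after the container first starts.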

5.7 Switch the interface to Chinese

6. Configure the Harbor image registry

6.1 Install docker and docker-compose

yum install docker-ce-20.10.9 -y

yum install epel-release -y

yum install docker-compose -y

6.2 Download the Harbor installer

Download link

The download can be slow; a download manager such as Xunlei helps.

Transfer the downloaded harbor-offline-installer-v1.10.10.tgz to the server with an FTP tool.

![image](https://img-blog.csdnimg.cn/d86e83585c5e4a4db88ce109ebf85de0.png)

6.3 Extract the installer

[root@localhost harbor]# tar -zxvf harbor-offline-installer-v1.10.10.tgz

harbor/harbor.v1.10.10.tar.gz

harbor/prepare

harbor/LICENSE

harbor/install.sh

harbor/common.sh

harbor/harbor.yml

[root@localhost harbor]#

6.4 Enter the extracted harbor directory

[root@localhost harbor]# ls

harbor harbor-offline-installer-v1.10.10.tgz

[root@localhost harbor]# cd harbor

[root@localhost harbor]# ls

common.sh harbor.v1.10.10.tar.gz harbor.yml install.sh LICENSE prepare

[root@localhost harbor]#

6.5 Load the images into Docker

docker load -i harbor.v1.10.10.tar.gz

6.6 Edit the harbor.yml configuration file

[root@localhost harbor]# cd harbor

[root@localhost harbor]# ls

common.sh harbor.v1.10.10.tar.gz harbor.yml install.sh LICENSE prepare

[root@localhost harbor]# vim harbor.yml

Set hostname to this machine's IP: 192.168.85.146

The admin password (harbor_admin_password) is Harbor12345.

![image](https://img-blog.csdnimg.cn/6245db9358a44c5cbb2ad0c084f7821b.png)

Comment out the entire https: section in harbor.yml; otherwise the installer fails with: ERROR:root:Error: The protocol is https but attribute ssl_cert is not set

6.7 Run **./prepare && ./install.sh**

./prepare

./install.sh

If the installed docker-compose is too old, download a newer release:

curl -L https://get.daocloud.io/docker/compose/releases/download/v2.4.1/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose
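Whether the upgrade is needed can be decided by comparing version strings. The sketch below assumes GNU sort (for -V); version_ge is a hypothetical helper, the 1.18.0 minimum is an assumption to confirm against the message printed by install.sh, and the installed value is a made-up example.

```shell
set -eu

# version_ge A B: succeeds when version A is greater than or equal to B.
# Relies on GNU sort -V (version sort), which CentOS 7 provides.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

required='1.18.0'   # assumed minimum; confirm against install.sh output
installed='1.16.1'  # made-up example; real value: docker-compose version --short

if version_ge "$installed" "$required"; then
  verdict='ok'
else
  verdict='too old'
fi
echo "docker-compose $installed is $verdict (need >= $required)"
```

A plain string comparison would get this wrong, since "1.9.0" sorts after "1.18.0" lexically; version sort compares each numeric component.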

6.8 Check the running containers

[root@localhost harbor]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1c2c49bcae29 goharbor/nginx-photon:v1.10.10 "nginx -g 'daemon of…" 47 seconds ago Up 45 seconds (healthy) 0.0.0.0:80->8080/tcp, :::80->8080/tcp nginx

bce07bf48d57 goharbor/harbor-jobservice:v1.10.10 "/harbor/harbor_jobs…" 47 seconds ago Up 45 seconds (healthy) harbor-jobservice

43a696210739 goharbor/harbor-core:v1.10.10 "/harbor/harbor_core" 48 seconds ago Up 46 seconds (healthy) harbor-core

2c460dcf924f goharbor/registry-photon:v1.10.10 "/home/harbor/entryp…" 50 seconds ago Up 47 seconds (healthy) 5000/tcp registry

8904cb8b36e2 goharbor/redis-photon:v1.10.10 "redis-server /etc/r…" 50 seconds ago Up 48 seconds (healthy) 6379/tcp redis

9b030e10048b goharbor/harbor-registryctl:v1.10.10 "/home/harbor/start.…" 50 seconds ago Up 48 seconds (healthy) registryctl

7613eb27e887 goharbor/harbor-db:v1.10.10 "/docker-entrypoint.…" 50 seconds ago Up 48 seconds (healthy) 5432/tcp harbor-db

ab698202e684 goharbor/harbor-portal:v1.10.10 "nginx -g 'daemon of…" 50 seconds ago Up 47 seconds (healthy) 8080/tcp harbor-portal

62496f80be73 goharbor/harbor-log:v1.10.10 "/bin/sh -c /usr/loc…" 52 seconds ago Up 49 seconds (healthy) 127.0.0.1:1514->10514/tcp harbor-log

[root@localhost harbor]#

6.9 Test access

Enter the server IP in a browser.

7. Connect the GitLab server to Harbor

7.1 Edit daemon.json on the GitLab server

Purpose: allow the CI server to push project images to the private Harbor registry.

insecure-registries holds the IP of the Harbor server.

vim /etc/docker/daemon.json

{
  "registry-mirrors": ["https://q5bf287q.mirror.aliyuncs.com", "https://registry.docker-cn.com", "http://hub-mirror.c.163.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "insecure-registries": ["192.168.85.146"]
}

7.2 Reload the configuration

systemctl daemon-reload

7.3 Restart Docker

systemctl restart docker

7.4 Test the connection

Log in to the Harbor server to test.

The credentials are the ones set when Harbor was deployed: admin / Harbor12345

[root@localhost docker]# docker login 192.168.85.146

Username: admin

Password: Harbor12345

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@localhost docker]#

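7.5 Connection succeeded

8. Continuous integration between Jenkins and GitLab

8.1 Create a key pair in Jenkins

Enter the Jenkins container first (substitute your own container ID, or use the container name npy_jenkins):

docker exec -it b5a49147b7f5 bash

# Generate the key pair; press Enter to accept every default

ssh-keygen

8.2 View and copy the public and private keys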

cat ~/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDBHrS0octCBPQYrNt3UAGdhxunMdBkmLBa3DATy0ND1QoRMbBaiZ6XYEMUaYEmiitMbXfzCHN8tEyUpqqxNDhjQ0kq0FlXRpUV65BWzvpWNM7cOG1yH1OE1JqGQ7HRyArKOK6JcCoFRn+F0LWO01GcTGbiF8z//ZeQo11GpDDXC2cQovDxIJTNS5y9BLRMZzU3XZNJRJvKVcmIINaX+Xiz49NfPauswa2aZV+cOkJetnqpMk6LkbDv+4FZ15lqQzSVpSTslbiZAp1t6TSLhoim8KubmFa9C7vP1lIqZtEJqPawJ7o9hZ8guo1O07SQPu6We7gNX0IccRLG0DNO/JPh root@localhost.localdomain

cat ~/.ssh/id_rsa

-----BEGIN OPENSSH PRIVATE KEY-----

b3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAABlwAAAAdzc2gtcn

NhAAAAAwEAAQAAAYEA2y/A53dGESZbz40mKb6tKjsBBdv/BzH7gkEhz3hpKXF6dSufP1Gj

f+bhei7kpPUFC1Vq0b3IWKkkF1+AqL9KMEWq38sDoiL72gnWvGIeclx9gCeKKR+runMGxs

aIJG12cTV3uEqAmVq4GN84qLS+d7pEBcJyVvq8rl1bqzexBeKipcRnrMC916fFDYIAP98y

RPUfE4TAMHUIh4FL4K0yWDr2IvbSs8J0ks/sY5IFc0ZXwWpsHd7rkRC8vd+/Z9QrSmUw6T

+Ci6RL11O2+rpSOHoXZcBbL6e+sOpKQTDDePYbLzbIL6jfXK4adv6WelMP5Ngs2WG8oCC1

/d9hMlm6alpom2gtdgEIireENkX6MWO7yq921oDC5tYq8rdUXpk8vkjhCHbGkqJrzPTqC3

raBV5SYMUwpUzRvsIaas4ZJjB4rLzNOZ/DCdSvYajSXGEkhTFKduDqEGd+TpOVEsJaDz7A

153HZc11vvuYr3ojVsycTWQAWlnGIipa7rj447NRAAAFiNfKu2DXyrtgAAAAB3NzaC1yc2

EAAAGBANsvwOd3RhEmW8+NJim+rSo7AQXb/wcx+4JBIc94aSlxenUrnz9Ro3/m4Xou5KT1

BQtVatG9yFipJBdfgKi/SjBFqt/LA6Ii+9oJ1rxiHnJcfYAniikfq7pzBsbGiCRtdnE1d7

hKgJlauBjfOKi0vne6RAXCclb6vK5dW6s3sQXioqXEZ6zAvdenxQ2CAD/fMkT1HxOEwDB1

CIeBS+CtMlg69iL20rPCdJLP7GOSBXNGV8FqbB3e65EQvL3fv2fUK0plMOk/goukS9dTtv

q6Ujh6F2XAWy+nvrDqSkEww3j2Gy82yC+o31yuGnb+lnpTD+TYLNlhvKAgtf3fYTJZumpa

aJtoLXYBCIq3hDZF+jFju8qvdtaAwubWKvK3VF6ZPL5I4Qh2xpKia8z06gt62gVeUmDFMK

VM0b7CGmrOGSYweKy8zTmfwwnUr2Go0lxhJIUxSnbg6hBnfk6TlRLCWg8+wNedx2XNdb77

mK96I1bMnE1kAFpZxiIqWu64+OOzUQAAAAMBAAEAAAGAEZT2C1sk8rE6Ah8XZZfW+iE7hs

XL4j7fJuakmKjW/q0MnqN+Ja0dyV+yzINAcf75hZw3clWf4YTH0VwmzOJzSAX+m+8D/piB

zU6mu/u+53uF0abaTUwuEUmyzHUWbJ2fN5uLW+wV/rcpN02IlPfSo3X8iN29ID8CrZXtiY

FxIMC6PUPQ8SmQ0OCzTM8VyAnWVXO4J2+pnvl0UrJLbN1XwX4RSmK0Khk6EqC9HIuVBlcp

KOmpfIfqK3vFOBHfn6uEG2IeNWYoabBvaXiJkZpp0yZKWV7IHectdssFcDRkFI+pzzZC5I

pea2VpSWoLMDd8qhyPZhV6F04hltQwDytBNvHXs3fYLu9J91qcC2JvsoEMTZGqbDsz2VQg

Lm0+Jjp0pbhgAuCXT3OKv+wgTxcChi7nVAskaAHfUJMB4E08kNSVjNmq3mdmHQ5U4jA8u/

K938v4sJcM7RXTx2iCeh2Q+4B3AcPL/Zi2vgZ5AKU1B+eVExRkShUUyvSebdaT9MZxAAAA

wEGlkwjOILNGC9Qj7WTivh/4T2WpTZ/CxOLogyoBbMEzKxe3a+HfnvrqhU3TT5KqNgJRFz

QXXbbzGrUpJxuNe+LjfPokI4GNQHQFumxC6fgPtNCt8OOhe+L3b9F0b5aIxQvBSdLhEoyb

kO7f7WvherVndQpC4EEZiBOevzNdhswmLLN7/3LRNohb3s5Nod5TE6ryt2RjG3mh8Bq/o0

NFRavPknEfQvJwEdXN+62lT3mrW1JWa05E1RUahKVc28Od+wAAAMEA/HptIL+eu5a6DvDQ

3aiudhh/pccPB9fcaWr9L0IUBHXM/RUe2UYcH3w06RJIbsbqB3ep5QbOaE6PSCE3D3mge4

9mChFwMy4GpijAL845v3agVR+bY3VhHD0xLQvFiphnBMtSQmS7JvTEk7+XOR4PUF8big2i

4s44KG/p4tB67C0fwdrt3IvYR7G4PTYQVlHeE8kgURGdg6LIPFgWtC8VSeSfz2PETSfyqo

/odIupNJdHV5qvpIY0R9kiWD0M2zp1AAAAwQDePnIwymfJ+SkWvgJNYI6AAGsDjxDPK1Gm

lC5IVc/KzgH9uuffv0nyQi1LJLce2OZuoQ8SsxBEkZWL01ptH3xv4sqZisO/nJGp5gLkso

ZmsImhZAfExrchnstivvXAJ3UmYDTd5xWxkNCX4Zom+3ciNBMAFiZq4Abj4TDO78tqBV/D

O30W8O1qHpXq+t6mAY0f8cIzdSLC4fwWBEbfhdLREPYecSYjka0FQ/NHuoh+aRkH1Ivan5

FEQwgQOeYfoe0AAAARcm9vdEBiNWE0OTE0N2I3ZjUBAg==

-----END OPENSSH PRIVATE KEY-----

8.3 Add the public key to GitLab

Log in to GitLab, open the user settings page, and follow the steps below to add an SSH key.

![image](https://img-blog.csdnimg.cn/f290bc0c43284cd09c5d92b4a0dbd19c.png)

8.4 Add a global credential (the private key) to Jenkins

Log in to Jenkins and open Manage Jenkins > Manage Credentials to reach the credentials page.

![image](https://img-blog.csdnimg.cn/4a798501dd094a5f9db9e2b67bdd4620.png)

8.5 Test pulling code

- First create a project in GitLab and push code to it. The branch must be master.

![image](https://img-blog.csdnimg.cn/806e0d05ab8944ddb2535080d7b0162e.png)

![image](https://img-blog.csdnimg.cn/cd19c82e7c0745199abb2be1574d64cf.png)

Copy the project URL.

![image](https://img-blog.csdnimg.cn/7786424b927e40a99223918fd747fe5f.png)

![image](https://img-blog.csdnimg.cn/324cb15ff8894004b2bda385af9d21ad.png)

After configuring the job, run a build; the console output reports success.

![image](https://img-blog.csdnimg.cn/7f1be4bd0dcd481f812c7c6df1353126.png)

Open the workspace to confirm that the code has been checked out.

![image](https://img-blog.csdnimg.cn/ddabba142a884979b8a05ff65e57e794.png)

8.6 Adjust Jenkins security settings

Go to Manage Jenkins > Configure Global Security > Authorization and tick "Allow anonymous read access".

![image](https://img-blog.csdnimg.cn/1028887590e24e35a23dda1e7298fdc7.png)

8.7 Code pulling is complete

9. Install Maven and the JDK for Jenkins

9.1 Upload the two archives to the server

[root@localhost jenkins]# rz

[root@localhost jenkins]# ls

apache-maven-3.9.0-bin.tar.gz docker-compose.yml jdk-8u11-linux-x64.tar.gz jenkins_mount jenkins.war

9.2 Extract Maven and the JDK and rename them

[root@localhost jenkins]# ls

apache-maven-3.9.0 apache-maven-3.9.0-bin.tar.gz docker-compose.yml jdk1.8.0_11 jdk-8u11-linux-x64.tar.gz jenkins_mount jenkins.war

[root@localhost jenkins]# mv apache-maven-3.9.0 maven

[root@localhost jenkins]# ls

apache-maven-3.9.0-bin.tar.gz docker-compose.yml jdk1.8.0_11 jdk-8u11-linux-x64.tar.gz jenkins_mount jenkins.war maven

[root@localhost jenkins]# mv jdk1.8.0_11/ jdk

[root@localhost jenkins]# ls

apache-maven-3.9.0-bin.tar.gz docker-compose.yml jdk jdk-8u11-linux-x64.tar.gz jenkins_mount jenkins.war maven

[root@localhost jenkins]#
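
The listing above only shows the directories after extraction. A minimal sketch of the extract-and-rename step could look like the following; the helper name `unpack_as` is hypothetical, and it assumes each archive contains a single top-level directory (as the Maven and JDK tarballs in the listing do).

```shell
# unpack_as ARCHIVE TARGET_DIR
# Extracts a .tar.gz archive and renames its top-level directory to TARGET_DIR.
unpack_as() {
    archive="$1"
    target="$2"
    # The first archive entry tells us the name of the top-level directory.
    top=$(tar -tzf "$archive" | head -n 1 | cut -d/ -f1)
    tar -xzf "$archive" || return 1
    mv "$top" "$target"
}

# Usage (file names taken from the listing above):
#   unpack_as apache-maven-3.9.0-bin.tar.gz maven
#   unpack_as jdk-8u11-linux-x64.tar.gz jdk
```

Doing both steps in one helper avoids typing the versioned directory name by hand.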

9.3 Edit settings.xml in the extracted Maven directory

Location: /home/jenkins/maven/conf/settings.xml

- Add the Aliyun mirror address

<mirrors>

<mirror>

<id>alimaven</id>

<name>aliyun maven</name>

<url>https://maven.aliyun.com/repository/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>

</mirrors>

- Compile with JDK 8

<profiles>

<profile>

<id>jdk8</id>

<activation>

<activeByDefault>true</activeByDefault>

<jdk>1.8</jdk>

</activation>

<properties>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<maven.compiler.compilerVersion>1.8</maven.compiler.compilerVersion>

</properties>

</profile>

</profiles>

- Activate the profile

<activeProfiles>

<activeProfile>jdk8</activeProfile>

</activeProfiles>
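
After editing, it can be worth confirming that both changes actually landed in the file. The sketch below is only a plain text check with `grep`, not an XML validation; the function name `check_settings` is hypothetical.

```shell
# check_settings FILE
# Succeeds only if FILE contains the alimaven mirror and the activated jdk8 profile.
check_settings() {
    file="$1"
    grep -q '<id>alimaven</id>' "$file" || {
        echo "mirror 'alimaven' missing" >&2
        return 1
    }
    grep -q '<activeProfile>jdk8</activeProfile>' "$file" || {
        echo "profile 'jdk8' not activated" >&2
        return 1
    }
    echo "settings look complete"
}

# Usage: check_settings /home/jenkins/maven/conf/settings.xml
```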

9.4 Move the JDK and Maven into the Jenkins mount directory

[root@localhost jenkins]# ls

apache-maven-3.9.0-bin.tar.gz docker-compose.yml jdk jdk-8u11-linux-x64.tar.gz jenkins_mount jenkins.war maven

[root@localhost jenkins]# mv jdk /home/jenkins/jenkins_mount/

[root@localhost jenkins]# mv maven/ /home/jenkins/jenkins_mount/

[root@localhost jenkins]# ls

apache-maven-3.9.0-bin.tar.gz docker-compose.yml jdk-8u11-linux-x64.tar.gz jenkins_mount jenkins.war

[root@localhost jenkins]#

9.5 Enter the Jenkins container

docker exec -it b5a49147b7f5 bash

Locate the JDK and Maven directories:

/var/jenkins_home/jdk

/var/jenkins_home/maven

root@b5a49147b7f5:/var/jenkins_home# cd jdk/

root@b5a49147b7f5:/var/jenkins_home/jdk# ls

COPYRIGHT LICENSE README.html THIRDPARTYLICENSEREADME-JAVAFX.txt THIRDPARTYLICENSEREADME.txt bin db include javafx-src.zip jre lib man release src.zip

root@b5a49147b7f5:/var/jenkins_home/jdk# pwd

/var/jenkins_home/jdk

root@b5a49147b7f5:/var/jenkins_home# cd maven/

root@b5a49147b7f5:/var/jenkins_home/maven# ls

LICENSE NOTICE README.txt bin boot conf lib

root@b5a49147b7f5:/var/jenkins_home/maven# pwd

/var/jenkins_home/maven

root@b5a49147b7f5:/var/jenkins_home/maven#
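
The manual `cd`/`ls` check above can also be scripted. This is a rough sketch, assuming the layout shown in the transcript (`jdk/bin/java` and `maven/bin/mvn` under the Jenkins home); the function name `check_tools` is hypothetical.

```shell
# check_tools HOME_DIR
# Verifies that the java and mvn launchers exist and are executable under HOME_DIR.
check_tools() {
    home="$1"
    for tool in jdk/bin/java maven/bin/mvn; do
        if [ ! -x "$home/$tool" ]; then
            echo "missing or not executable: $home/$tool" >&2
            return 1
        fi
    done
    echo "JDK and Maven found under $home"
}

# Usage inside the container: check_tools /var/jenkins_home
```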

9.6 Configure the JDK and Maven in the Jenkins global configuration

![image](https://img-blog.csdnimg.cn/cf8757c6b084405d92a289811b59bb20.png)

![image](https://img-blog.csdnimg.cn/1c41da6f255840f09460c021b2d14608.png)

* 9.7 Create a directory on the Jenkins server to hold the jar files (this does not seem to be needed...)

[root@localhost jenkins]# mkdir MavenJar

[root@localhost jenkins]# chmod 777 -R MavenJar/

[root@localhost jenkins]# ll

total 256152

-rw-r--r--. 1 root root 9024147 Feb 14 16:44 apache-maven-3.9.0-bin.tar.gz

-rw-r--r--. 1 root root 433 Feb 16 23:57 docker-compose.yml

-rw-r--r--. 1 root root 159019376 Sep 27 2021 jdk-8u11-linux-x64.tar.gz

drwxrwxrwx. 20 root root 4096 Feb 17 03:56 jenkins_mount

-rw-r--r--. 1 root root 94238599 Feb 12 15:32 jenkins.war

drwxrwxrwx. 2 root root 6 Feb 17 03:56 MavenJar

[root@localhost jenkins]#

* 9.8 In the system configuration, set up Publish over SSH

![image](https://img-blog.csdnimg.cn/21c32065567741498f24d80b56c48cd4.png)

9.9 Test packaging with Maven

In the job configuration, under Build Steps, add "Invoke top-level Maven targets".

![image](https://img-blog.csdnimg.cn/f21a4706f02d4542aed36f63e2980e61.png)

clean package -DskipTests

Apply and save, then rebuild; the build now runs the packaging step. The first run is slow because the dependencies have to be downloaded, so be patient.

10. Create a pipeline that connects to K8s for CI/CD

10.0 Configure the k8s SSH server in Jenkins

This is set in the system configuration (SSH Servers).

Remember to create the /usr/local/k8s directory on the k8s master and run chmod 777 on it.

![image](https://img-blog.csdnimg.cn/8e91cefbd2ac48f68777a7d5f534021f.png)
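
Preparing the upload directory on the master can be done in one step. A small sketch, with a hypothetical helper name `prepare_upload_dir`; mode 777 follows the instruction above, although a tighter mode would also work if Jenkins connects as the directory's owner.

```shell
# prepare_upload_dir DIR
# Creates DIR (including missing parents) and makes it writable for everyone.
prepare_upload_dir() {
    dir="$1"
    mkdir -p "$dir" && chmod 777 "$dir"
}

# Usage on the k8s master: prepare_upload_dir /usr/local/k8s
```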

10.1 Create a project, choose Pipeline, and write the pipeline script below

![image](https://img-blog.csdnimg.cn/fd053f64013e41dcab78133af1608453.png)

10.2 Outline of a pipeline script (for reference)

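// All script commands go inside the pipeline block
pipeline {
    // Which node in the Jenkins cluster the job runs on
    agent any

    // Global variables, declared once for convenience
    environment {
        key = 'value'
    }

    stages {
        stage('Pull code from the Git repository') {
            steps {
                echo 'Pull code from the Git repository - SUCCESS'
            }
        }
        stage('Build the project with Maven') {
            steps {
                echo 'Build the project with Maven - SUCCESS'
            }
        }
        stage('Build a custom image with Docker') {
            steps {
                echo 'Build a custom image with Docker - SUCCESS'
            }
        }
        stage('Push the custom image to Harbor') {
            steps {
                echo 'Push the custom image to Harbor - SUCCESS'
            }
        }
        stage('Transfer the yml file to k8s-master') {
            steps {
                echo 'Transfer the yml file to k8s-master - SUCCESS'
            }
        }
        stage('Run kubectl on k8s-master remotely') {
            steps {
                echo 'Run kubectl on k8s-master remotely - SUCCESS'
            }
        }
    }
}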

![image](https://img-blog.csdnimg.cn/c3ca980e844d4102ba5540465281c7d9.png)

10.3 Pipeline syntax: pull code from the Git repository

![image](https://img-blog.csdnimg.cn/a4bb10269d034faa8ac022bea9ff6fca.png)

![image](https://img-blog.csdnimg.cn/8fac11873cf94a44b8818f5f9923589c.png)

checkout([$class: 'GitSCM', branches: [[name: '*/master']], extensions: [], userRemoteConfigs: [[url: 'http://192.168.85.145/root/lover.git']]])

10.4 Pipeline syntax: build the project with Maven

![image](https://img-blog.csdnimg.cn/d6c6678675204cd4989053361404fd21.png)

sh '/var/jenkins_home/maven/bin/mvn clean package -DskipTests'

10.5 Pipeline syntax: build a custom image with Docker

![image](https://img-blog.csdnimg.cn/782f478a43eb4f359c5c12c919912345.png)

sh '''mv ./target/*.jar ./docker/lover_story.jar

docker build -t ${JOB_NAME}:${tag} ./docker/'''

10.5 Pipeline syntax: push the custom image to Harbor

This requires adding the private registry to /etc/docker/daemon.json on the Jenkins server:

{

"registry-mirrors" : ["https://q5bf287q.mirror.aliyuncs.com", "https://registry.docker-cn.com","http://hub-mirror.c.163.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"insecure-registries": ["192.168.85.146"]

}

# Reload the configuration and restart Docker

systemctl daemon-reload

systemctl restart docker

docker swarm init
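
The same registry entry has to be added on several machines, so generating the file may be convenient. The sketch below is a reduced version of the file shown above: it leaves out `registry-mirrors` for brevity and overwrites the target file, so merge by hand if the file already has other settings. The function name `write_daemon_json` is hypothetical.

```shell
# write_daemon_json FILE REGISTRY
# Writes a daemon.json that trusts REGISTRY as an insecure (plain HTTP) registry.
# Note: this overwrites FILE.
write_daemon_json() {
    file="$1"
    registry="$2"
    cat > "$file" <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "insecure-registries": ["$registry"]
}
EOF
}

# Usage (then reload and restart Docker as shown above):
#   write_daemon_json /etc/docker/daemon.json 192.168.85.146
```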

First log in to Harbor with docker login.

Then tag the image.

Finally push it to the target repository.

![image](https://img-blog.csdnimg.cn/6ded779562ef4d838f9b1eff99969df8.png)

sh '''docker login -u ${harborUser} -p ${harborPassword} ${harborAddress}

docker tag ${JOB_NAME}:${tag} ${harborAddress}/${harborRepo}/${JOB_NAME}:${tag}

docker push ${harborAddress}/${harborRepo}/${JOB_NAME}:${tag}'''
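
To make the naming scheme explicit: the pushed image is always `address/repo/name:tag`. The following sketch wraps the tag and push steps in two hypothetical helpers (`image_ref`, `push_image`); the `DOCKER` variable is an assumption added only so the commands can be dry-run with `DOCKER=echo`.

```shell
DOCKER="${DOCKER:-docker}"   # set DOCKER=echo to print the commands instead of running them

# image_ref ADDRESS REPO NAME TAG
# Prints the full registry reference ADDRESS/REPO/NAME:TAG.
image_ref() {
    printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# push_image ADDRESS REPO NAME TAG
# Tags the local image NAME:TAG with its registry reference and pushes it.
push_image() {
    ref=$(image_ref "$1" "$2" "$3" "$4")
    "$DOCKER" tag "$3:$4" "$ref" && "$DOCKER" push "$ref"
}

# Usage: push_image 192.168.85.146 lover lover v4.0.0
```

As a side note, `docker login` also accepts `--password-stdin`, which keeps the password out of the process list, for example `printf '%s' "$harborPassword" | docker login -u "$harborUser" --password-stdin "$harborAddress"`.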

10.6 Pipeline syntax: send the yml file to the master node

- Let Jenkins connect to k8s without a password

Enter the Jenkins container and copy its public key:

root@b5a49147b7f5:~/.ssh# cat id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDbL8Dnd0YRJlvPjSYpvq0qOwEF2/8HMfuCQSHPeGkpcXp1K58/UaN/5uF6LuSk9QULVWrRvchYqSQXX4Cov0owRarfywOiIvvaCda8Yh5yXH2AJ4opH6u6cwbGxogkbXZxNXe4SoCZWrgY3ziotL53ukQFwnJW+ryuXVurN7EF4qKlxGeswL3Xp8UNggA/3zJE9R8ThMAwdQiHgUvgrTJYOvYi9tKzwnSSz+xjkgVzRlfBamwd3uuRELy9379n1CtKZTDpP4KLpEvXU7b6ulI4ehdlwFsvp76w6kpBMMN49hsvNsgvqN9crhp2/pZ6Uw/k2CzZYbygILX932EyWbpqWmibaC12AQiKt4Q2RfoxY7vKr3bWgMLm1iryt1RemTy+SOEIdsaSomvM9OoLetoFXlJgxTClTNG+whpqzhkmMHisvM05n8MJ1K9hqNJcYSSFMUp24OoQZ35Ok5USwloPPsDXncdlzXW++5iveiNWzJxNZABaWcYiKlruuPjjs1E= root@b5a49147b7f5

root@b5a49147b7f5:~/.ssh#

- Configure the Jenkins public key on the master node

Append the contents of id_rsa.pub to ~/.ssh/authorized_keys:

[root@k8s-master ~]# cd ~

[root@k8s-master ~]# ls

anaconda-ks.cfg

[root@k8s-master ~]# mkdir .ssh

[root@k8s-master ~]# cd .ssh/

[root@k8s-master .ssh]# ls

[root@k8s-master .ssh]# vim authorized_keys

[root@k8s-master .ssh]#
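
With its default `StrictModes` setting, sshd ignores an authorized_keys file whose permissions are too open, so it helps to set them explicitly. A minimal sketch of the same step without an editor; the helper name `install_pubkey` is hypothetical.

```shell
# install_pubkey HOME_DIR PUBKEY_LINE
# Adds PUBKEY_LINE to HOME_DIR/.ssh/authorized_keys with the permissions sshd expects.
install_pubkey() {
    ssh_dir="$1/.ssh"
    key="$2"
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
    touch "$ssh_dir/authorized_keys" && chmod 600 "$ssh_dir/authorized_keys" || return 1
    # Append only if this exact line is not already present.
    grep -qxF -- "$key" "$ssh_dir/authorized_keys" ||
        printf '%s\n' "$key" >> "$ssh_dir/authorized_keys"
}

# Usage on the master, pasting the key copied from the Jenkins container:
#   install_pubkey /root 'ssh-rsa AAAA... root@b5a49147b7f5'
```

Calling it twice with the same key leaves a single entry in the file.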

- lover.yml, the manifest that is sent to the master node

apiVersion: apps/v1
kind: Deployment
metadata:
  # Namespace names cannot contain underscores; use the same namespace as the Service and Ingress
  namespace: test
  name: lover
  labels:
    app: lover
spec:
  replicas: 3
  selector:
    matchLabels:
      app: lover
  template:
    metadata:
      labels:
        app: lover
    spec:
      containers:
        - name: lover
          image: 192.168.85.146/lover/lover:v4.0.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8082
---
apiVersion: v1
kind: Service
metadata:
  namespace: test
  labels:
    app: lover
  name: lover
spec:
  selector:
    app: lover
  ports:
    - port: 8088
      targetPort: 8082
  type: NodePort
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: test
  name: lover
spec:
  ingressClassName: ingress
  rules:
    - host: lph.pipline.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: lover
                port:
                  number: 8088
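
Kubernetes requires namespace names to be RFC 1123 DNS labels: at most 63 characters, only lowercase letters, digits and `-`, beginning and ending with a letter or digit. A name containing an underscore, such as `lover_story`, is therefore rejected by the API server. A quick pre-check can be sketched as follows (the function name `is_valid_namespace` is hypothetical):

```shell
# is_valid_namespace NAME
# Returns 0 if NAME is a valid RFC 1123 DNS label, 1 otherwise.
is_valid_namespace() {
    name="$1"
    [ "${#name}" -le 63 ] || return 1
    printf '%s\n' "$name" | LC_ALL=C grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
}

# Usage:
#   is_valid_namespace test        && echo ok
#   is_valid_namespace lover_story || echo "invalid namespace name"
```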

sshPublisher(publishers: [sshPublisherDesc(configName: 'k8s', transfers: [sshTransfer(cleanRemote: false, excludes: '', execCommand: '', execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: '', remoteDirectorySDF: false, removePrefix: '', sourceFiles: 'lover.yml')], usePromotionTimestamp: false, useWorkspaceInPromotion: false, verbose: false)])

10.7 Pipeline syntax: run kubectl on k8s-master remotely

![image](https://img-blog.csdnimg.cn/816c3f8a3b9449d295788dc5864e50d0.png)

sh 'ssh root@192.168.85.141 kubectl apply -f /usr/local/k8s/lover.yml'

Remember that the master also needs the private registry address in its /etc/docker/daemon.json.
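
`kubectl apply` returns as soon as the objects are accepted, not when the pods are running. One possible extension, sketched under assumptions, is to wait for the rollout afterwards: the helper name `remote_deploy`, the namespace `test`, the 120s timeout, and the `SSH` variable (there only so the commands can be dry-run with `SSH=echo`) are not part of the original setup.

```shell
SSH="${SSH:-ssh}"   # set SSH=echo to print the remote commands instead of running them

# remote_deploy HOST MANIFEST NAMESPACE DEPLOYMENT
# Applies MANIFEST on HOST, then waits until DEPLOYMENT has finished rolling out.
remote_deploy() {
    "$SSH" "root@$1" kubectl apply -f "$2" &&
        "$SSH" "root@$1" kubectl rollout status "deployment/$4" -n "$3" --timeout=120s
}

# Usage: remote_deploy 192.168.85.141 /usr/local/k8s/lover.yml test lover
```

If the rollout does not finish in time, the function returns non-zero, which would fail the pipeline stage instead of reporting a false success.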

10.8 The final pipeline script

// All script commands go inside the pipeline block
pipeline {
    // Which node in the Jenkins cluster the job runs on
    agent any

    // Global variables, declared once for convenience
    environment {
        harborUser = 'admin'
        harborPassword = 'Harbor12345'
        harborAddress = '192.168.85.146'
        harborRepo = 'lover'
    }

    stages {
        stage('Pull code from the Git repository') {
            steps {
                checkout([$class: 'GitSCM', branches: [[name: '*/master']], extensions: [], userRemoteConfigs: [[url: 'http://192.168.85.145/root/lover.git']]])
            }
        }
        stage('Build the project with Maven') {
            steps {
                sh '/var/jenkins_home/maven/bin/mvn clean package -DskipTests'
            }
        }
        stage('Build a custom image with Docker') {
            steps {
                sh '''mv ./target/*.jar ./docker/
                docker build -t ${JOB_NAME}:${tag} ./docker/'''
            }
        }
        stage('Push the custom image to Harbor') {
            steps {
                sh '''docker login -u ${harborUser} -p ${harborPassword} ${harborAddress}
                docker tag ${JOB_NAME}:${tag} ${harborAddress}/${harborRepo}/${JOB_NAME}:${tag}
                docker push ${harborAddress}/${harborRepo}/${JOB_NAME}:${tag}'''
            }
        }
        stage('Transfer the yml file to k8s-master') {
            steps {
                sshPublisher(publishers: [sshPublisherDesc(configName: 'k8s', transfers: [sshTransfer(cleanRemote: false, excludes: '', execCommand: '', execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: '', remoteDirectorySDF: false, removePrefix: '', sourceFiles: 'lover.yml')], usePromotionTimestamp: false, useWorkspaceInPromotion: false, verbose: false)])
            }
        }
        stage('Run kubectl on k8s-master remotely') {
            steps {
                sh 'ssh root@192.168.85.141 kubectl apply -f /usr/local/k8s/lover.yml'
            }
        }
    }
}

10.9 Run the pipeline to complete the k8s deployment

ip link set cni0 down && ip link set flannel.1 down

ip link delete cni0 && ip link delete flannel.1

systemctl restart containerd && systemctl restart kubelet

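Errors

1. docker service ls fails

If /etc/docker/daemon.json is not loaded correctly, images cannot be pulled from Harbor.

In that case, run the following on every node of the k8s cluster, provided the Harbor address has already been configured:

docker swarm init

2. Images cannot be pulled from Harbor

Check whether the image repository is public! A private repository requires a pull secret to be configured.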
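
For a private repository, the usual approach is a `docker-registry` secret that the pods reference. A minimal sketch, where the helper name `create_pull_secret`, the secret name `harbor-cred`, and the namespace are assumptions; the `KUBECTL` variable exists only so the command can be dry-run with `KUBECTL=echo`.

```shell
KUBECTL="${KUBECTL:-kubectl}"   # set KUBECTL=echo to print the command instead of running it

# create_pull_secret NAME NAMESPACE SERVER USER PASSWORD
# Creates a docker-registry secret holding the registry credentials.
create_pull_secret() {
    "$KUBECTL" create secret docker-registry "$1" \
        --namespace "$2" \
        --docker-server="$3" \
        --docker-username="$4" \
        --docker-password="$5"
}

# Usage: create_pull_secret harbor-cred test 192.168.85.146 admin 'your-password'
```

The Deployment's pod spec then needs an `imagePullSecrets` entry with `name: harbor-cred` so the kubelet uses these credentials when pulling the image.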